Xinyang Liu (刘昕洋)

Incoming PhD,

Statistics and Data Sciences (SDS)

The University of Texas at Austin

Email: xinyangATK [AT] gmail [dot] com

Google Scholar | Github | Twitter

Bio

Howdy! I am a first-year PhD student at The University of Texas at Austin, advised by Prof. Mingyuan Zhou. I also work closely with Dr. Ruqi Zhang, an Assistant Professor at Purdue University. I received my M.S. degree from Xidian University in 2024, advised by Prof. Bo Chen. Previously, I obtained my B.S. degree from Xidian University in 2021.

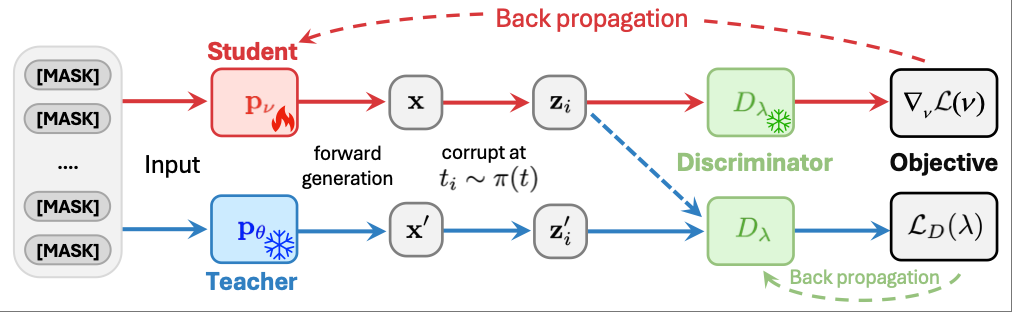

My research interests lie in the general area of probabilistic modeling, particularly in solving real-world problems through advanced Generative AI systems. My recent research focuses on Generative Modeling, including its theoretical foundations and its various applications in data generation (e.g., dLLMs) and multimodal learning. I am currently exploring all dimensions of diffusion Large Language Models (dLLMs), including foundation-model design, inference acceleration, and post-training for downstream tasks.

If you share similar research interests, feel free to reach out or add me on WeChat.

I’m actively looking for internship opportunities starting in Summer 2026. Feel free to reach out if you’re interested in my research.

news

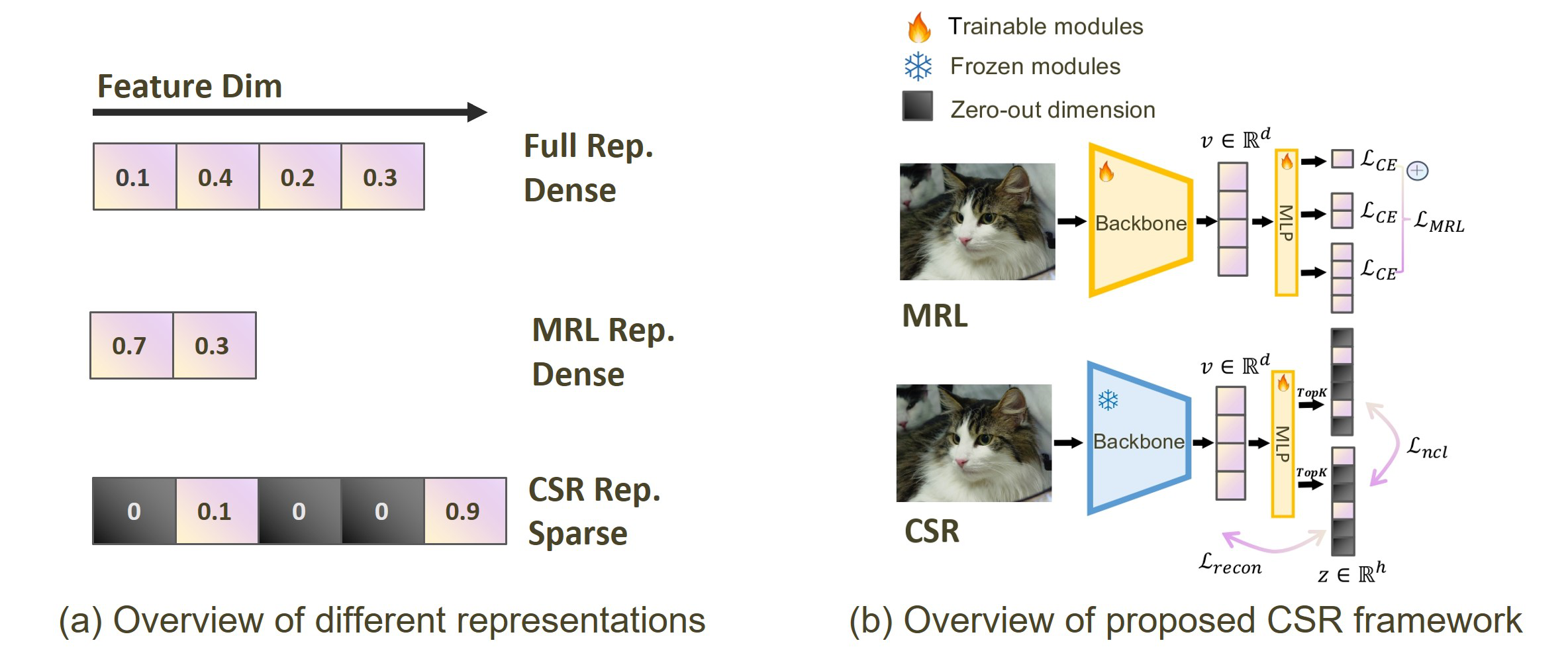

| Jun, 2025 | Our paper “Beyond Matryoshka: Revisiting Sparse Coding for Adaptive Representation” has been selected for an Oral Presentation at ICML 2025! Big congratulations to Tiansheng! |

|---|---|

| Mar, 2025 | I’m thrilled to accept the offer from UT-Austin and can’t wait to enjoy the legendary barbecue 🔥🍖🤠 in Austin! |

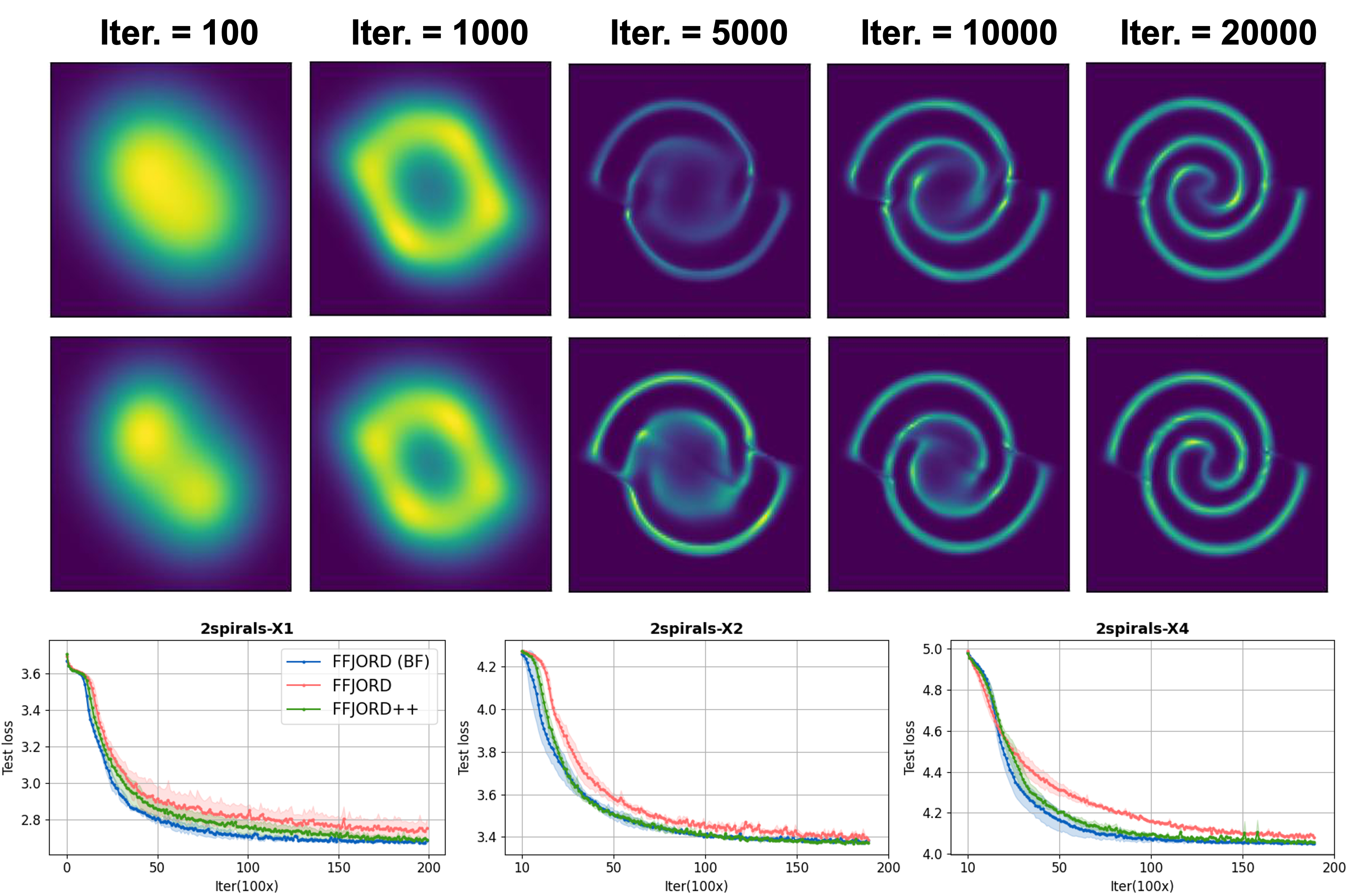

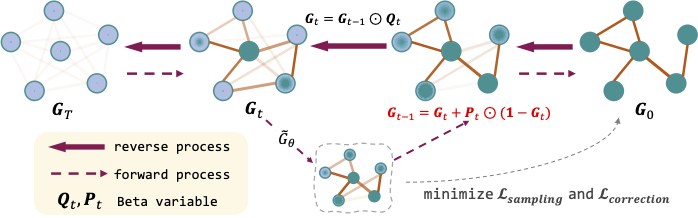

| Jan, 2025 | Happy Birthday🍰🕯️👑 The best gifts come from two accepted papers! Much appreciation to all of my collaborators and advisors! In “Optimal Stochastic Trace Estimation in Generative Modeling” (AISTATS 2025), we leverage the Hutch++ estimator in generative modeling and propose a practical algorithm that amortizes decompositions to reduce costs, while also providing theoretical guarantees specifically in generative modeling context. In “Advancing Graph Generation through Beta Diffusion” (ICLR 2025), we futher explore the potential of Beta Diffusion in graph modeling and propose a novel graph-driven generative process with concentration modulation technique, which makes Beta Diffusion unique again! |

| Jun, 2024 | I graduated with a master’s degree in Xidian University! |

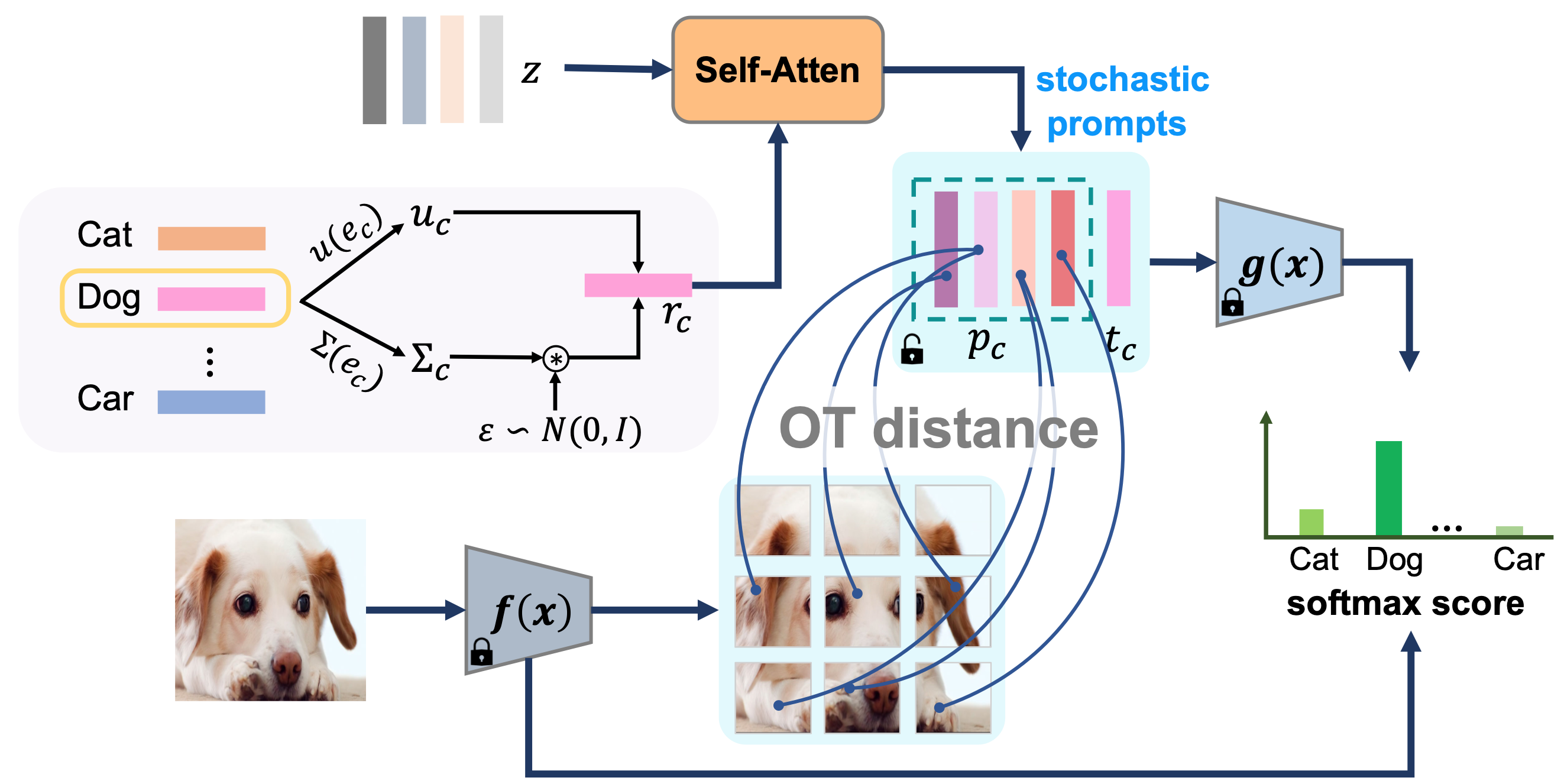

| Apr, 2024 | Our paper “Patch-Prompt Aligned Bayesian Prompt Tuning for Vision-Language Models” is accepted by UAI 2024. |

| Sep, 2023 | Two papers are accepted by NeurIPS 2023! |

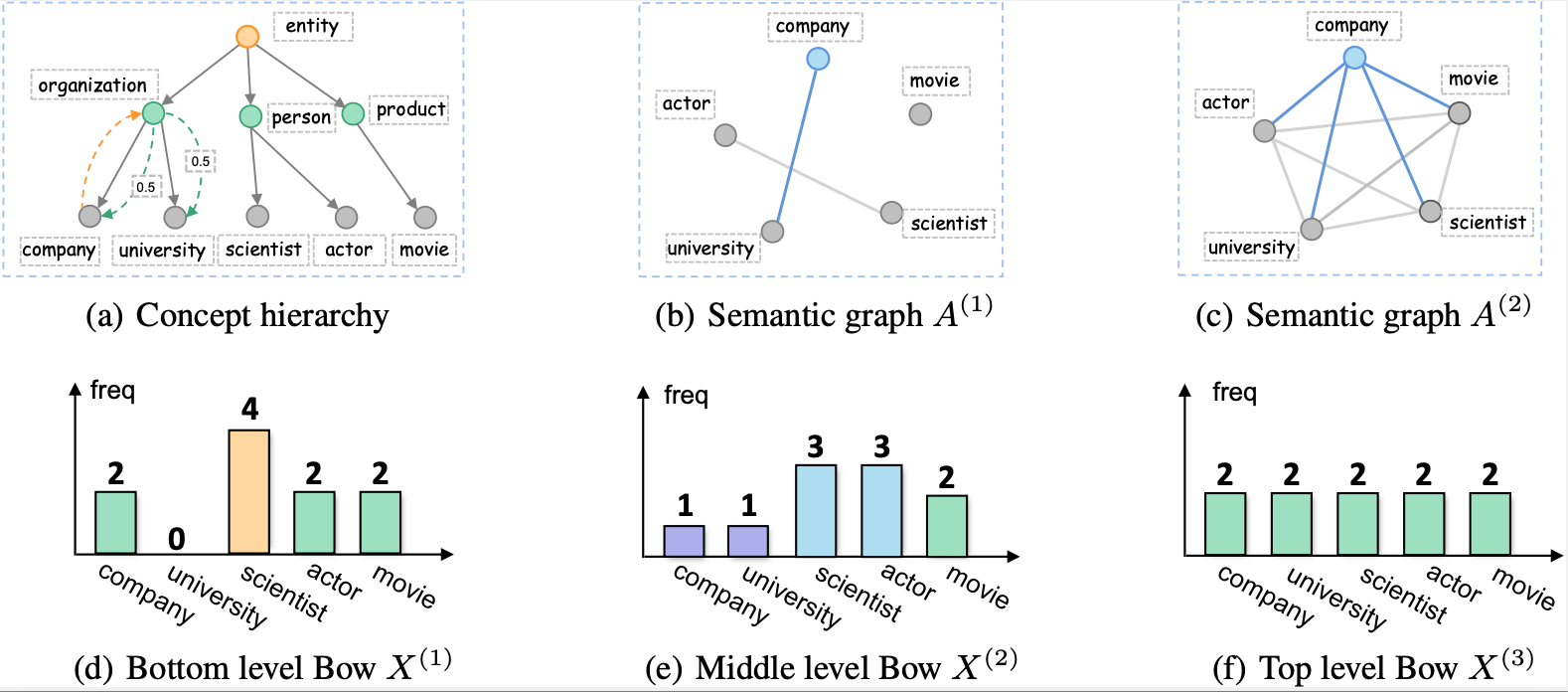

| Apr, 2023 | Our paper “Bayesian Progressive Deep Topic Model with Knowledge Informed Textual Data Coarsening Process” is accepted by ICML 2023. |

| Feb, 2023 | PyDPM is a python library focuses on constructing Deep Probabilistic Models (DPMs). Our developed Pydpm not only provides efficient distribution sampling functions on GPU, but also has included the implementations of existing popular DPMs. |

selected publications

(*) denotes equal contribution